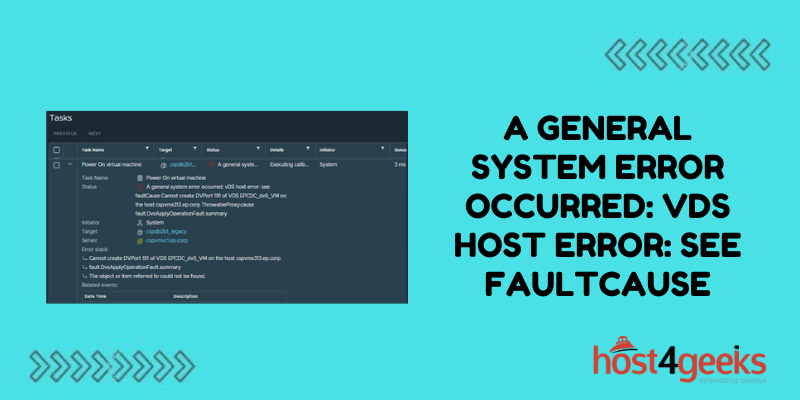

The message “A general system error occurred: vDS host error: see faultCause” can puzzle VMware administrators. It signals a failure on an ESXi host that participates in a vSphere Distributed Switch (vDS), but it says little about what actually failed. Tracking down the trigger requires methodical log analysis and component testing.

When this vDS fault appears, avoid reactive changes that could destabilize the environment further. This guide walks through a systematic approach to debugging and resolving the error.

Decoding the Actual Error Message

The “see faultCause” portion of the message means the reported fault wraps a more specific underlying fault, whose details were logged. The top-level event states only a generic “general system error”, so finding and deciphering the faultCause yields the actionable clues.
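
The nesting that “see faultCause” refers to can be pictured as a chain of faults, each wrapping a more specific one. The following is a minimal sketch of walking such a chain down to its innermost message. The `Fault` class and its `fault_cause` field are hypothetical stand-ins for illustration, not types from any VMware SDK, and the inner message is invented.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Fault:
    """Hypothetical stand-in for a fault object; not an SDK type."""
    message: str
    fault_cause: Optional["Fault"] = None


def cause_chain(fault: Fault) -> List[str]:
    """Return messages from the outermost fault down to the innermost cause."""
    chain = []
    seen = set()  # guards against a cycle in malformed data
    current = fault
    while current is not None and id(current) not in seen:
        seen.add(id(current))
        chain.append(current.message)
        current = current.fault_cause
    return chain


# Illustrative data only: the inner message is invented for this example.
outer = Fault(
    "A general system error occurred: vDS host error: see faultCause",
    Fault("example inner cause text"),
)
for depth, message in enumerate(cause_chain(outer)):
    print("  " * depth + message)
```

The last entry in the chain is usually the one worth searching for in the logs.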

Scanning Logs for Common Precursors

Precursor errors often surface before the general system fault. Check the logs leading up to the failure for warnings in these areas:

Network Misconfigurations

Improperly configured distributed switches or port groups (for example, mismatched VLAN IDs, MTU values, or teaming policies) can misdirect traffic, overload uplinks, and cause cascading failures.

Resource Constraint Violations

CPU or memory contention on a host can push it against capacity limits and interfere with failover inside resource pools.

Host Compatibility Issues

A mismatch between the ESXi host version and the vDS version can cause failures. In particular, a host running an ESXi release older than the distributed switch version cannot join that switch.
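
As a rough illustration of a version check, the sketch below flags hosts whose ESXi version is lower than the switch version. It assumes plain dotted version strings and that a host must run at least the switch's version. The host names and the inventory dictionary are hypothetical, and in practice the versions would come from your own inventory export.

```python
def parse_version(text, width=3):
    """Turn '7.0.3' into (7, 0, 3), padding short versions with zeros."""
    parts = [int(p) for p in text.strip().split(".")]
    parts += [0] * (width - len(parts))
    return tuple(parts[:width])


def incompatible_hosts(vds_version, host_versions):
    """Return sorted host names whose ESXi version is below the vDS version."""
    required = parse_version(vds_version)
    return sorted(
        name
        for name, version in host_versions.items()
        if parse_version(version) < required
    )


# Hypothetical inventory for illustration.
hosts = {"esx01": "7.0.3", "esx02": "6.7.0", "esx03": "8.0"}
print(incompatible_hosts("7.0.3", hosts))
```

Padding to a fixed width keeps "8.0" and "8.0.0" equal, which a naive tuple comparison would not.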

Analyzing Failure Logging Data

vCenter Server, the affected ESXi hosts, and the virtual machines involved in the fault each log troubleshooting data around the time of failure. The vDS itself keeps no separate log; its events appear in the vCenter and host logs.

vCenter VPXD Logs

The vCenter Server service (vpxd) records faults related to vDS connection issues, switch failures, rollback errors, and multi-host infrastructure problems. On the vCenter Server Appliance, the log is /var/log/vmware/vpxd/vpxd.log.

ESXi Host Logs

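On the affected hosts, examine the syslog and VMkernel logs (/var/log/syslog.log and /var/log/vmkernel.log), along with /var/log/hostd.log and /var/log/vpxa.log, for vmnic disconnects, vDS-related failures, or rolled-back network transactions.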

Virtual Machine Logs

Inspect each affected VM's vmware.log, along with guest OS and application logs, for network errors leading up to the system fault.
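
To narrow any of these logs to the minutes before the failure, a small filter can help. The sketch below assumes each relevant line starts with an ISO 8601 UTC timestamp; check your own log format before relying on it. The sample lines are invented for illustration, and real log text will differ.

```python
import re
from datetime import datetime, timedelta

# Leading ISO 8601 timestamp, e.g. 2024-01-15T10:02:11.123Z (assumed format).
TIMESTAMP = re.compile(r"^(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}(?:\.\d{1,6})?)")


def parse_timestamp(line):
    """Return the line's leading timestamp as a naive datetime, or None."""
    match = TIMESTAMP.match(line)
    if not match:
        return None
    text = match.group(1)
    fmt = "%Y-%m-%dT%H:%M:%S.%f" if "." in text else "%Y-%m-%dT%H:%M:%S"
    return datetime.strptime(text, fmt)


def lines_before(lines, failure_time, minutes=10):
    """Keep timestamped lines from the `minutes` before failure_time, inclusive."""
    start = failure_time - timedelta(minutes=minutes)
    kept = []
    for line in lines:
        stamp = parse_timestamp(line)
        if stamp is not None and start <= stamp <= failure_time:
            kept.append(line)
    return kept


# Invented sample lines for illustration.
sample = [
    "2024-01-15T09:40:00.000Z info example: routine message",
    "2024-01-15T10:01:30.500Z warning example: uplink state changed",
    "continuation line without a timestamp",
    "2024-01-15T10:02:11.123Z error example: operation failed",
    "2024-01-15T10:05:00.000Z info example: later message",
]
failure = datetime(2024, 1, 15, 10, 2, 30)
for line in lines_before(sample, failure):
    print(line)
```

Lines without a timestamp are dropped here; a fuller version might attach them to the preceding timestamped line.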

Testing VDS Failover Capabilities

Force a failover to confirm that redundancy works and to determine whether the problem is isolated to particular hosts or clusters:

Multi-Host Migration

Use vMotion to live-migrate VMs between hosts and clusters while monitoring the logs and performance metrics. A migration that fails only toward one host points at that host's vDS configuration.

Maintenance Mode Testing

Place a host in maintenance mode to evacuate its VMs and simulate the loss of an infrastructure component.

Forced Service Restarts

Restart the management agents (hostd and vpxa) on an affected host to check whether startup sequences or health checks contribute to the issue. The vDS has no standalone process of its own to restart.

Root Cause Analysis

Connecting the findings so far narrows the search to a specific trigger. Read the faultCause details alongside the surrounding log context to pinpoint the root cause.

Linking Event Sequence

Correlate timestamped events across vCenter and the hosts to reconstruct the full sequence that led to the error. This works only if the clocks agree, so verify time synchronization on every host first.
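
One way to build such a combined timeline is to merge per-source event lists by timestamp. The sketch below assumes the events have already been parsed into `(timestamp, message)` tuples. The source names and messages are invented for illustration.

```python
import heapq
from datetime import datetime


def merge_timelines(events_by_source):
    """Merge per-source event lists into one chronological timeline.

    events_by_source maps a source name to a list of (timestamp, message)
    tuples. Each list is sorted first, because heapq.merge needs sorted inputs.
    """
    streams = [
        [(stamp, source, message) for stamp, message in sorted(events)]
        for source, events in events_by_source.items()
    ]
    return list(heapq.merge(*streams))


# Invented events for illustration.
events = {
    "vcenter": [
        (datetime(2024, 1, 15, 10, 2, 12), "reconfigure task reported an error"),
    ],
    "esx01": [
        (datetime(2024, 1, 15, 10, 2, 11), "host-side operation failed"),
        (datetime(2024, 1, 15, 10, 1, 30), "uplink state changed"),
    ],
}
for stamp, source, message in merge_timelines(events):
    print(stamp.isoformat(), source, message)
```

Reading the merged output top to bottom shows which component reported trouble first.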

Recognizing Failure Patterns

Match logged events, warnings, and error signatures against known-issue catalogs, such as the VMware knowledge base and release notes, to find documented scenarios that resemble yours.
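
A small signature catalog can make this matching repeatable. In the sketch below, the patterns, notes, and sample lines are all placeholders invented for illustration, not real knowledge base entries. You would replace them with signatures from issues you have actually confirmed.

```python
import re

# Hypothetical catalog: patterns and notes are placeholders, not real KB entries.
KNOWN_SIGNATURES = [
    (re.compile(r"uplink .* down", re.IGNORECASE),
     "Possible physical NIC or cabling issue"),
    (re.compile(r"version mismatch", re.IGNORECASE),
     "Possible host and switch version incompatibility"),
]


def match_signatures(lines, catalog=KNOWN_SIGNATURES):
    """Return (line, note) pairs for every line matching a catalog pattern."""
    hits = []
    for line in lines:
        for pattern, note in catalog:
            if pattern.search(line):
                hits.append((line, note))
    return hits


# Invented sample lines for illustration.
log_lines = [
    "10:01 uplink vmnic2 is down",
    "10:02 routine message",
    "10:03 Version mismatch detected",
]
for line, note in match_signatures(log_lines):
    print(note + ": " + line)
```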

Differential Comparisons

Compare configurations, topology, software versions, and operational procedures against a healthy environment to spot differences that could explain the fault.
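
A comparison like this can be sketched as a dictionary diff between a healthy host and a faulty one. The setting names and values below are hypothetical. In practice they would come from whatever configuration export you use.

```python
MISSING = "<missing>"  # marker for a key present on only one side


def diff_settings(healthy, faulty):
    """Return {key: (healthy_value, faulty_value)} for every differing key."""
    differences = {}
    for key in sorted(set(healthy) | set(faulty)):
        left = healthy.get(key, MISSING)
        right = faulty.get(key, MISSING)
        if left != right:
            differences[key] = (left, right)
    return differences


# Hypothetical settings for illustration.
healthy_host = {"mtu": 9000, "uplinks": 2, "vlan": 120}
faulty_host = {"mtu": 1500, "vlan": 120}

for key, (good, bad) in diff_settings(healthy_host, faulty_host).items():
    print(f"{key}: healthy={good} faulty={bad}")
```

Not every difference is a cause, but each one is a candidate worth ruling out.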

Resolving the Core Problem

Once the root cause is known, apply a remedy that targets it:

Correcting Misconfigured Components

Adjust distributed switch settings, port group parameters, teaming policies, and other networking options so that they match the physical network and stay consistent across hosts.

Upgrading Incompatible Versions

Bring vCenter Server, the ESXi hosts, and the distributed switch up to mutually compatible releases and patch levels. Upgrade vCenter Server first, then the hosts, and the vDS version last.

Right-sizing Constrained Resources

Allocate additional CPU, memory, storage, or network headroom to relieve contention and leave enough spare capacity for fault tolerance and failover.

Preventative Error Avoidance

Complement the fix with practices that reduce the chance of recurrence, including safeguards in the environment and controlled change management:

Monitoring Utilization Trends

Watch for early indicators such as rising peaks in resource consumption, which can eventually exceed available capacity if left unaddressed.
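
As a rough way to quantify such a trend, the sketch below fits a straight line through daily utilization samples and estimates how many days remain before a limit is reached. It assumes one reading per day and linear growth, which is a simplification, and the sample values are invented.

```python
def days_until_limit(samples, limit):
    """Project when a linear trend through daily samples reaches `limit`.

    samples holds one utilization reading per day, oldest first.
    Returns days counted from the last sample, or None if the trend
    is flat or falling (or there are too few samples).
    """
    n = len(samples)
    if n < 2:
        return None
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    # Ordinary least-squares slope and intercept.
    slope_num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(samples))
    slope_den = sum((x - mean_x) ** 2 for x in range(n))
    slope = slope_num / slope_den
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    crossing = (limit - intercept) / slope  # day index where the line hits limit
    return max(0.0, crossing - (n - 1))


# Invented daily peak utilization, in percent.
peaks = [50, 52, 54, 56, 58]
print(days_until_limit(peaks, limit=90))  # 16.0
```

A short projected runway is a prompt to add capacity before contention starts affecting failover.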

Testing Infrastructure Hardening

Audit HA configurations and redundancy mechanisms, and rehearse failure scenarios in routine disaster simulations, to uncover weak points.

Reviewing Change Impacts

Before upgrading infrastructure components, review release notes and interoperability information, especially for interdependent layers of the virtualization stack with documented compatibility issues.

The Bottom Line

Staying vigilant about the operational health and resiliency of a complex virtualized environment helps you get ahead of cryptic “general system error” messages like the vDS host fault. Use the structured troubleshooting and resolution approach outlined here as a game plan for tackling vague but serious infrastructure errors.