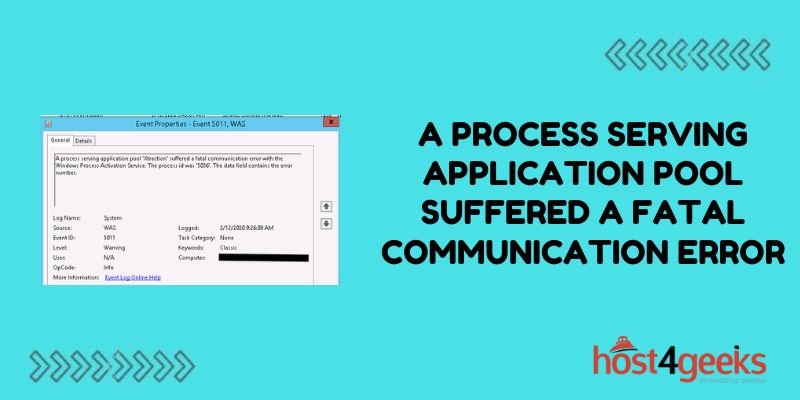

Seeing the System event log error “A process serving application pool <name> suffered a fatal communication error with the Windows Process Activation Service” while your IIS web apps are going down can stop admins dead in their tracks.

In this comprehensive guide, we’ll demystify what this error actually means, walk step-by-step through identifying root causes, apply fixes to the Windows Process Activation Service (WAS) and your application pools, and prevent future communication disruptions so your web apps keep running 24/7.

The Role of Worker Processes and WAS

To understand what’s failing, we must first explain how IIS, application pools, and WAS work together to run the worker processes that handle incoming requests.

App Pool Purpose

Application pools create distinct runtime environments to isolate apps for stability, security, and resource management. Different web apps can have independent configs.

Web Garden & Worker Processes

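Each app pool runs one or more worker processes (w3wp.exe) that execute application code and generate responses to site requests. A pool configured with more than one worker process (maxProcesses greater than 1) is called a web garden; it can add concurrency for some workloads, but the processes do not share in-memory state such as in-process session state.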
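To see which pools are set up as web gardens, you can read the maxProcesses attribute of each pool’s processModel element in applicationHost.config (normally under %windir%\System32\inetsrv\config). The following is a minimal Python sketch that parses a hypothetical, trimmed excerpt with made-up pool names. It assumes a pool without an explicit maxProcesses uses 1 and ignores anything inherited from applicationPoolDefaults, so treat it as a starting point rather than a complete parser.

```python
import xml.etree.ElementTree as ET

# Hypothetical, trimmed excerpt of applicationHost.config (pool names are made up).
SAMPLE_CONFIG = """
<configuration>
  <system.applicationHost>
    <applicationPools>
      <add name="DefaultAppPool" />
      <add name="ShopPool">
        <processModel maxProcesses="4" />
      </add>
    </applicationPools>
  </system.applicationHost>
</configuration>
"""


def worker_process_limits(config_xml: str) -> dict[str, int]:
    """Map each application pool name to its maxProcesses value.

    Assumption: a pool with no explicit maxProcesses uses 1; values inherited
    from applicationPoolDefaults are not considered in this sketch.
    """
    root = ET.fromstring(config_xml)
    limits: dict[str, int] = {}
    for pool in root.iterfind("system.applicationHost/applicationPools/add"):
        model = pool.find("processModel")
        raw = model.get("maxProcesses") if model is not None else None
        limits[pool.get("name")] = int(raw) if raw else 1
    return limits


if __name__ == "__main__":
    for name, count in worker_process_limits(SAMPLE_CONFIG).items():
        kind = "web garden" if count > 1 else "single worker process"
        print(f"{name}: maxProcesses={count} ({kind})")
```

On a server, you would pass in the contents of the real file instead of SAMPLE_CONFIG, reading it from an elevated session.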

WAS Inter-Process Communication

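The Windows Process Activation Service is a Windows service (hosted in svchost.exe) that IIS depends on; the World Wide Web Publishing Service (W3SVC) requires it. WAS starts and stops worker processes and keeps a named-pipe control channel open to each one, which it uses for health pings and for recycle and shutdown commands. Requests themselves travel from HTTP.sys directly to the worker processes; they do not pass through WAS.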
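You can see this control channel on a live server: each w3wp.exe command line (visible in Process Explorer, or through the CommandLine property returned by `Get-CimInstance Win32_Process`) names its pool and its pipe. The Python sketch below pulls the `-switch value` pairs out of such a command line. The sample string is hypothetical, and the assumption that `-ap` carries the pool name and `-a` the `\\.\pipe\iisipm<guid>` pipe comes from commonly observed command lines, not from documented behavior.

```python
import re

# Hypothetical w3wp.exe command line. Assumption: "-ap" is the pool name and
# "-a" is the inter-process messaging (IPM) pipe WAS uses to talk to the worker.
SAMPLE_CMDLINE = (
    r'c:\windows\system32\inetsrv\w3wp.exe -ap "ShopPool" -v "v4.0" '
    r'-l "webengine4.dll" -a \\.\pipe\iisipm0f3c2b1e-example '
    r'-h "C:\inetpub\temp\apppools\ShopPool\ShopPool.config"'
)

# A switch is whitespace, a dash, and a word; its value is either a quoted
# string (possibly empty) or a run of non-whitespace characters.
SWITCH_RE = re.compile(r'\s-(\w+)\s+(?:"([^"]*)"|(\S+))')


def parse_w3wp_cmdline(cmdline: str) -> dict[str, str]:
    """Return {switch: value} for every -switch value pair on the command line."""
    return {
        m.group(1): m.group(2) if m.group(2) is not None else m.group(3)
        for m in SWITCH_RE.finditer(cmdline)
    }


if __name__ == "__main__":
    args = parse_w3wp_cmdline(SAMPLE_CMDLINE)
    print("pool:", args.get("ap"))
    print("pipe:", args.get("a"))
```

Matching the process ID from a WAS event against this list tells you which pool and pipe were involved when several pools run side by side.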

So WAS provides the cross-process coordination that keeps worker processes alive and healthy. When that channel breaks, WAS loses track of the process.

Fatal Communication Failure Causes

WAS logs this error (Event ID 5011 in the System log) when its named-pipe connection to a worker process breaks unexpectedly. Most often the worker process died first: an unhandled exception, a stack overflow, or a crash in a native module terminates w3wp.exe, and WAS reports the lost connection. Common contributing conditions:

Process Limits Reached

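If every worker process the pool allows (maxProcesses) is saturated, new requests back up in the pool’s HTTP.sys queue (clients receive 503 errors once queueLength is exceeded), and overloaded workers can become slow to respond to WAS’s health pings.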

Access Denials

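Restrictive access control lists on site content, configuration files, or temporary folders can prevent the application pool identity from reading what it needs, causing the worker process to fail during startup or request processing.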

WAS Service Disruptions

OS updates that restart services, misconfigured service recovery options for WAS, or a crash of the WAS service itself tear down the control channels to every worker process at once.

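Overload and Missed Health Pings

The named pipes between WAS and worker processes are local, so network bandwidth is not the bottleneck. Under heavy load, however, a worker process whose threads are all busy or hung may fail to answer WAS’s periodic health ping within the pool’s pingResponseTime (90 seconds by default, with a ping every pingInterval of 30 seconds). WAS then treats the process as unhealthy, shuts it down, and logs Event ID 5010.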
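WAS’s ping-based health check can be pictured with a toy Python model. It is not WAS’s actual implementation: it assumes each ping is either answered at a known time or never answered, uses the default pingResponseTime of 90 seconds, and the `Ping` class and `first_missed_ping` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional

# IIS defaults for an application pool's processModel ping settings.
PING_INTERVAL_S = 30        # pingInterval
PING_RESPONSE_TIME_S = 90   # pingResponseTime


@dataclass
class Ping:
    """One health ping from WAS to a worker process (toy model)."""
    sent_at: float                 # seconds since the worker started
    answered_at: Optional[float]   # None if the worker never answered


def first_missed_ping(pings: list[Ping],
                      limit_s: float = PING_RESPONSE_TIME_S) -> Optional[Ping]:
    """Return the first ping answered too late (or never), i.e. the point at
    which WAS would consider the worker unhealthy; None if all were on time."""
    for ping in pings:
        if ping.answered_at is None or ping.answered_at - ping.sent_at > limit_s:
            return ping
    return None


if __name__ == "__main__":
    # A worker whose threads get tied up after its first ping.
    busy_worker = [
        Ping(0, 0.1),
        Ping(PING_INTERVAL_S, 150.0),
        Ping(2 * PING_INTERVAL_S, None),
    ]
    missed = first_missed_ping(busy_worker)
    print(f"worker considered unhealthy after the ping sent at t={missed.sent_at}s")
```

The model shows why a worker can be shut down under load even though nothing is wrong with the pipe: the process is simply too busy to answer within the limit.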

Step-by-Step Diagnosis and Repair

With an understanding of the moving pieces behind an application pool’s fatal communication error, we can walk through bringing functionality back step-by-step:

Step 1: Review Event Viewer

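Check the Windows System and Application event logs around the time requests began failing. In the System log, look for WAS events 5011 (fatal communication error), 5009 (process terminated unexpectedly), 5010 (process failed to respond to a ping), and 5002 (pool disabled by rapid-fail protection). In the Application log, Event ID 1000 (Application Error) for w3wp.exe names the faulting module, and Windows Error Reporting events (1001) carry the fault bucket details. Service Control Manager events 7000 and 7001 matter only if the WAS service itself failed to start. Export the relevant logs before they roll over.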
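To build a timeline from those events, you can export them with `wevtutil qe System /q:"*[System[(EventID=5011)]]" /f:xml` (widening the query to other IDs as needed) and parse the result. A minimal Python sketch follows. The sample export is hypothetical, including its data field names (`AppPoolID`, `ProcessID`), so the code collects whatever `Data` elements are present instead of relying on specific names. It also assumes the export is a bare sequence of `<Event>` elements without an XML declaration, which is why it wraps them in a root element before parsing.

```python
import xml.etree.ElementTree as ET

NS = {"e": "http://schemas.microsoft.com/win/2004/08/events/event"}

# WAS event IDs worth correlating with a fatal communication error.
WAS_EVENTS = {
    5002: "pool disabled by rapid-fail protection",
    5009: "worker process terminated unexpectedly",
    5010: "worker process failed to respond to a ping",
    5011: "fatal communication error with WAS",
}

# Hypothetical export: one WAS event plus one unrelated Service Control Manager event.
SAMPLE_EXPORT = """<Event xmlns="http://schemas.microsoft.com/win/2004/08/events/event">
  <System>
    <Provider Name="Microsoft-Windows-WAS" />
    <EventID>5011</EventID>
    <TimeCreated SystemTime="2024-01-15T09:42:07.000Z" />
  </System>
  <EventData>
    <Data Name="AppPoolID">ShopPool</Data>
    <Data Name="ProcessID">4312</Data>
  </EventData>
</Event>
<Event xmlns="http://schemas.microsoft.com/win/2004/08/events/event">
  <System>
    <Provider Name="Service Control Manager" />
    <EventID>7036</EventID>
    <TimeCreated SystemTime="2024-01-15T09:42:09.000Z" />
  </System>
</Event>"""


def summarize_was_events(export_xml: str) -> list[dict]:
    """Return time, ID, meaning, and data fields for each WAS event of interest."""
    # wevtutil emits a sequence of <Event> elements with no single root element.
    root = ET.fromstring(f"<Events>{export_xml}</Events>")
    rows = []
    for event in root.findall("e:Event", NS):
        event_id = int(event.findtext("e:System/e:EventID", namespaces=NS))
        if event_id not in WAS_EVENTS:
            continue
        data = {d.get("Name"): d.text for d in event.findall("e:EventData/e:Data", NS)}
        rows.append({
            "time": event.find("e:System/e:TimeCreated", NS).get("SystemTime"),
            "id": event_id,
            "meaning": WAS_EVENTS[event_id],
            "data": data,
        })
    return rows


if __name__ == "__main__":
    for row in summarize_was_events(SAMPLE_EXPORT):
        print(row["time"], row["id"], row["meaning"], row["data"])
```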

Step 2: Validate App Pool & WAS Configs

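Open IIS Manager and review the application pool used by the affected site: confirm the maximum number of worker processes (maxProcesses) isn’t set unnecessarily low, and note the rapid-fail protection, ping, and startup/shutdown time limits under Advanced Settings.

Also check the recovery actions configured for the WAS service in the Services console, each pool’s .NET CLR version and managed pipeline mode, and, if you use non-HTTP activation, the listener adapter services, since any of these can act as a single point of failure.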
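One WAS setting worth understanding here is rapid-fail protection: when a pool’s worker processes crash too often within a short period, WAS disables the pool and logs Event ID 5002, after which requests receive 503 errors until the pool is started again. By default it is enabled with rapidFailProtectionMaxCrashes set to 5 and rapidFailProtectionInterval set to 5 minutes. The Python sketch below is a toy sliding-window model of that rule; the exact counting boundary it uses (disable once the count inside the window reaches the maximum) is an assumption, and `crash_that_disables_pool` is a hypothetical name.

```python
from collections import deque
from typing import Iterable, Optional

# IIS defaults for an application pool's failure settings.
RAPID_FAIL_MAX_CRASHES = 5       # rapidFailProtectionMaxCrashes
RAPID_FAIL_INTERVAL_S = 5 * 60   # rapidFailProtectionInterval (5 minutes)


def crash_that_disables_pool(crash_times: Iterable[float],
                             max_crashes: int = RAPID_FAIL_MAX_CRASHES,
                             interval_s: float = RAPID_FAIL_INTERVAL_S) -> Optional[float]:
    """Return the time (seconds) of the crash at which rapid-fail protection
    would disable the pool, or None if it never trips.

    Assumption: the pool is disabled as soon as max_crashes failures fall
    inside one sliding interval.
    """
    window: deque = deque()
    for t in sorted(crash_times):
        window.append(t)
        # Forget crashes that are older than the sliding interval.
        while t - window[0] >= interval_s:
            window.popleft()
        if len(window) >= max_crashes:
            return t
    return None


if __name__ == "__main__":
    print(crash_that_disables_pool([0, 60, 120, 180, 240]))
    print(crash_that_disables_pool([0, 100, 200, 300, 400, 500]))
```

Under this model, five crashes a minute apart disable the pool, while the same number of crashes spread 100 seconds apart never fill the window. If a pool keeps landing in the stopped state, that pattern in the event log points back to the crashes rather than to WAS.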

Step 3: Inspect Security Permissions

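Use Sysinternals Process Monitor to confirm that the application pool identity can open everything it needs: site content, web.config files, temporary folders, and the per-pool configuration files WAS generates under %SystemDrive%\inetpub\temp\apppools. WAS itself must also be able to read applicationHost.config. Filter on w3wp.exe and look for ACCESS DENIED results.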
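A Process Monitor capture can be long, so it helps to export it (File > Save, CSV format) and filter it with a script. The Python sketch below assumes the default export columns ("Time of Day", "Process Name", "PID", "Operation", "Path", "Result", "Detail"); the sample rows are hypothetical. When reading a real export, open it with `encoding="utf-8-sig"` and `newline=""` in case the file starts with a byte-order mark.

```python
import csv
import io

# Hypothetical Process Monitor CSV export using the default columns.
SAMPLE_PROCMON_CSV = '''"Time of Day","Process Name","PID","Operation","Path","Result","Detail"
"9:42:07.1234567 AM","w3wp.exe","4312","CreateFile","C:\\inetpub\\shop\\web.config","ACCESS DENIED","Desired Access: Generic Read"
"9:42:07.2234567 AM","w3wp.exe","4312","CreateFile","C:\\inetpub\\shop\\bin\\Shop.dll","SUCCESS","Desired Access: Generic Read"
"9:42:07.3234567 AM","svchost.exe","1020","RegOpenKey","HKLM\\SOFTWARE\\Example","ACCESS DENIED",""
'''


def access_denied_paths(procmon_csv: str, process: str = "w3wp.exe") -> list[tuple[str, str]]:
    """Return (operation, path) for every ACCESS DENIED result from `process`."""
    reader = csv.DictReader(io.StringIO(procmon_csv))
    return [
        (row["Operation"], row["Path"])
        for row in reader
        if row["Process Name"].lower() == process.lower()
        and row["Result"] == "ACCESS DENIED"
    ]


if __name__ == "__main__":
    for operation, path in access_denied_paths(SAMPLE_PROCMON_CSV):
        print(f"{operation}: {path}")
```

Each path it reports is a candidate for an ACL fix for the application pool identity, which is typically `IIS AppPool\<pool name>` when the pool runs as ApplicationPoolIdentity.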

Step 4: Consider Application Changes

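Recent code deployments are a frequent trigger. Unhandled exceptions on background threads, runaway recursion that overflows the stack, or features that demand more CPU and memory than the pool was planned for can all take down w3wp.exe. If crashes persist, capture a crash dump of w3wp.exe (for example with Sysinternals ProcDump or DebugDiag) to identify the faulting code.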

Step 5: Restart WAS Service

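Restarting the Windows Process Activation Service tears down and recreates every worker process and control channel, clearing transient faults. Because W3SVC depends on WAS, this briefly takes down every site on the server, and W3SVC must be started again afterwards.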

Step 6: Recycle App Pool

If the WAS restart doesn’t restore functionality, recycle the affected application pool. WAS starts a fresh worker process with a new control channel and retires the old one, which is also the least disruptive option when only one pool is failing.
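Steps 5 and 6 can be run from an elevated prompt with `net` and `appcmd`. The Python sketch below only builds and prints the commands unless you pass `dry_run=False`, so you can review them first. The appcmd path is the usual default and the pool name "ShopPool" is hypothetical; adjust both for your server.

```python
import subprocess

# Usual appcmd location (assumption: adjust if IIS is installed elsewhere).
APPCMD = r"C:\Windows\System32\inetsrv\appcmd.exe"


def restart_was_commands() -> list[list[str]]:
    """Step 5: restart WAS. 'net stop WAS /y' also stops dependent services
    such as W3SVC, so W3SVC is started again explicitly (which starts WAS)."""
    return [["net", "stop", "WAS", "/y"], ["net", "start", "W3SVC"]]


def recycle_commands(pool_name: str) -> list[list[str]]:
    """Step 6: recycle a single application pool with appcmd."""
    return [[APPCMD, "recycle", "apppool", f"/apppool.name:{pool_name}"]]


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False
    (requires Windows and an elevated session)."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Dry run by default: review the commands before executing anything.
    run(recycle_commands("ShopPool"))
    run(restart_was_commands())
```

Recycling one pool is the gentler first move; restarting WAS affects every site on the server. Other services that depend on WAS (such as non-HTTP listener adapters) are not restarted by this sketch.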

Step 7: Reboot Server

While heavy-handed, an IIS server reboot eliminates any possibility of lingering OS/network stack faults interfering with restored Named Pipes communication flows.

With IPC between WAS and the worker processes restored through corrected configuration, repaired permissions, fixed application code, and component restarts, the fatal communication errors give way to seamless site visitor experiences.

Preventing Future Activation & Communication Failures

While dealing with cryptic application pool errors makes any admin yearn for telepathy, saving precious debugging time requires getting out ahead of communication failures before customers call about unreachable sites:

Architect App Pools for Peak Usage: Right-size worker process count, memory, and CPU according to seasonal traffic patterns and future growth.

Enable WAS Health Monitoring: Keep pinging enabled on each pool and alert on WAS events 5002, 5009, 5010, and 5011 so crashing or unresponsive worker processes are caught early.

Load Test Sites Post-Deployment: Validate that new code and pool configuration changes hold up under realistic concurrency before subjecting infrastructure to real workload.

Follow Least Privilege Security: Reduce the risk of permissions-induced activation failures with strict controls.

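Tune WAS Settings: If worker processes are legitimately busy for long stretches (for example during a slow application startup), adjust processModel settings such as pingResponseTime, startupTimeLimit, and shutdownTimeLimit so healthy but busy processes aren’t shut down prematurely.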
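For the post-deployment load test, even a small smoke test can reveal a pool that starts returning 503s or dropping connections under concurrency. The Python sketch below sends a fixed number of GET requests in parallel and tallies the status codes, using 0 for connection-level failures. It is a minimal sketch, not a substitute for a real load-testing tool; it deliberately ignores proxy environment variables, and any URL you point it at should be a non-production copy of the site.

```python
import urllib.error
import urllib.request
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Ignore proxy environment variables so traffic goes straight to the target.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status for one GET, or 0 if the connection itself failed."""
    try:
        with _OPENER.open(url, timeout=timeout) as resp:
            resp.read()
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # e.g. 503 when the pool is stopped or its queue is full
    except OSError:
        return 0  # connection refused, reset, or timed out


def smoke_load_test(url: str, total: int = 200, concurrency: int = 20) -> Counter:
    """Send `total` GET requests using `concurrency` parallel workers and
    count how often each status code came back."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return Counter(pool.map(lambda _: fetch_status(url), range(total)))
```

For example, `print(smoke_load_test("http://staging.example.local/health", total=500, concurrency=50))` against a hypothetical staging host; any 503 or 0 counts are worth correlating with WAS events logged at the same time.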

In Closing

With vigilance around balancing stability, security, and performance across integrated Windows and IIS components serving requests, “fatal communication errors” don’t stand a chance!

Now that you can get to the bottom of application pool communication failures quickly (no psychic abilities required) and head off future issues, you can deploy web apps with renewed confidence, knowing a path to resolution exists even when the error message is vague.